Problem

From the start of our work on the AI assistant, we ran into the complexity of actually building an autonomous assistant that could accurately answer questions about the user based on their integrations and create tasks and calendar events that correspond to user requests. The accuracy and usability problem comes down to this: self-directed actions by agents require far more background work and testing on our side before we can claim the system offers a solution.

Challenges

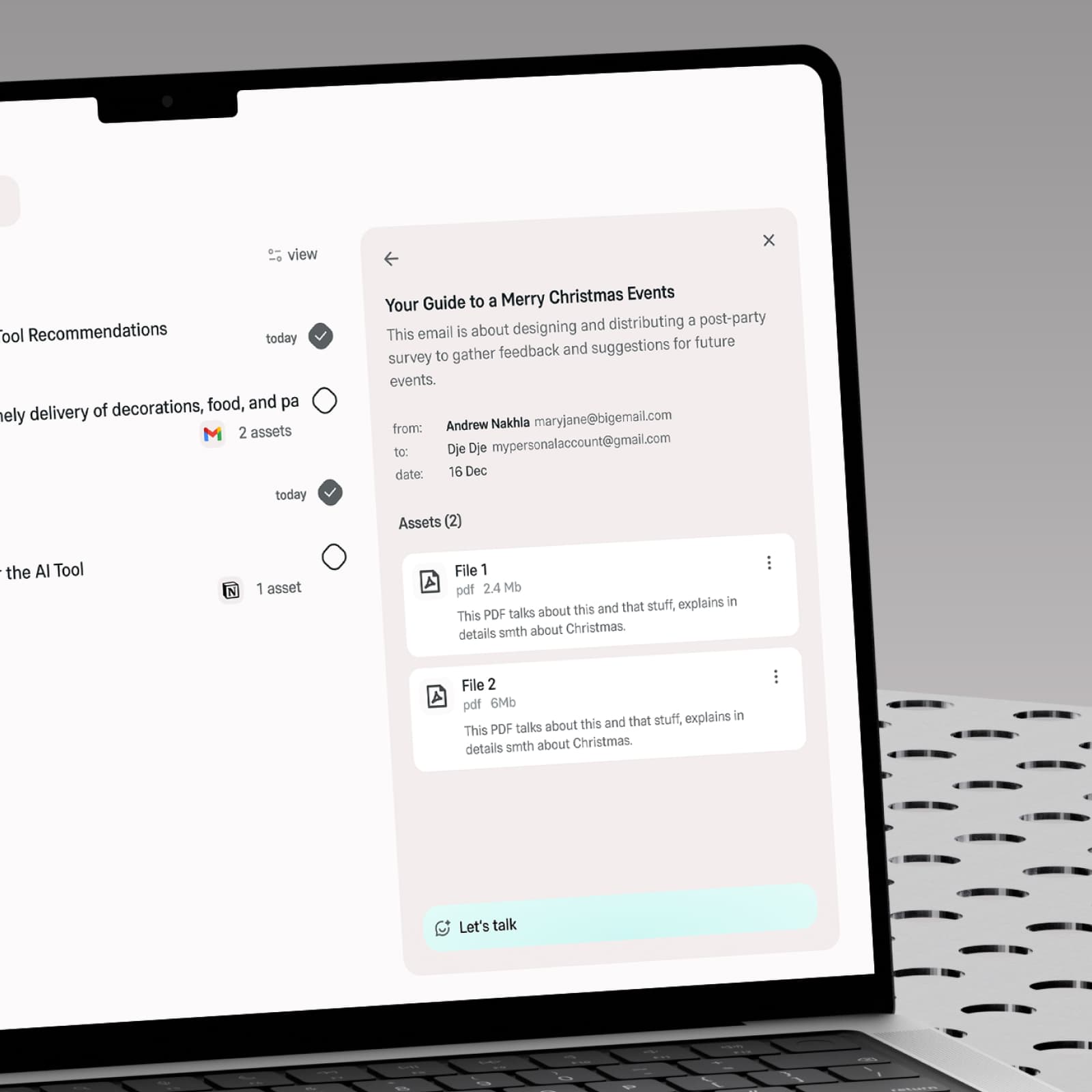

We are in an era of mixing UI with automated assistants. The chat-based interface is the most common and basic medium for this combination, and it comes with awkward limitations (reading email summaries in the chat?). Assuming the LLM is fine-tuned correctly, I believe the process of designing interfaces shifts from manually placing GUI components on the screen to orchestrating information points into agentic workflows, with the user acting as a guide who supplies knowledge through prompts. Hence, we need to give our customers the right instruments to lead. That means finding the strongest parts of the AI and classic API worlds and making them work together and progressively support each other, because the priority is to help the user deal with the problem and arrive at the goal, not the AI itself.